Summary:

State AGs are stepping up enforcement on cybersecurity and AI, with a focus on consumer protection and data privacy. Major actions include a lawsuit against T-Mobile and settlements with Google and Blackbaud, underscoring the risks for companies. Future enforcement is expected to target AI technologies under existing state laws.

Original Article:

State attorneys general are actively pursuing enforcement actions on cybersecurity and artificial intelligence, with a focus on consumer protection and data privacy. Recent examples illustrate the trend: the California AG secured a settlement with Blackbaud over inadequate security measures, and the Washington AG sued T-Mobile over data breach violations. In the AI space, several state AGs have issued legal advisories explaining how existing consumer protection laws apply to AI technologies, and California and Texas are leading formal investigations into alleged privacy violations by AI model developers.

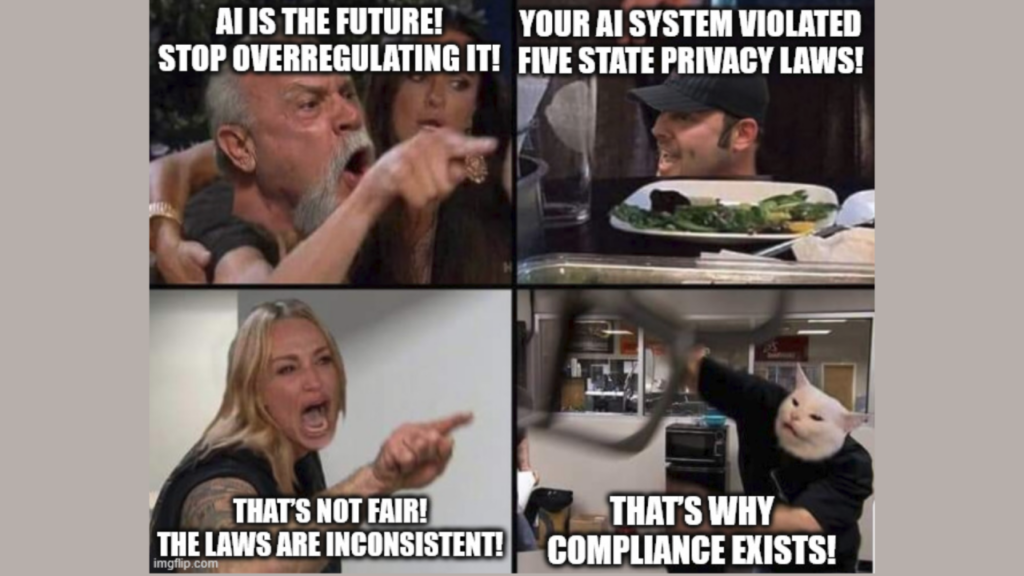

This enforcement surge creates clear legal and financial risks for companies across industries. Organizations that fail to comply with state-specific privacy and data protection laws face monetary penalties and reputational damage from public enforcement actions. Companies must navigate different requirements across state jurisdictions, each with independent enforcement authority. The establishment of dedicated privacy enforcement units in California, Texas, Virginia, and New Hampshire signals a shift toward more systematic and aggressive enforcement rather than occasional actions.

State attorneys general have expanded their enforcement priorities beyond traditional data breaches to include algorithmic accountability. Their investigations increasingly scrutinize how AI systems make decisions that affect consumers, with particular attention to bias and discrimination. In practice, this pushes companies toward specific technical and operational safeguards: algorithmic impact assessments, bias testing protocols, and transparent documentation of how AI systems reach their decisions. These are concrete compliance expectations, not theoretical concerns.
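One way to make documentation of AI decision-making auditable is to capture each automated decision as a structured, tamper-evident record. The Python sketch below assumes a hypothetical DecisionRecord schema; the field names, model name, and values are illustrative and are not drawn from any statute or AG guidance. Each record is serialized deterministically and hashed so that later edits can be detected.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One automated decision, captured for later review (hypothetical schema)."""
    model_name: str
    model_version: str
    subject_id: str        # pseudonymous identifier rather than raw personal data
    inputs: dict           # the features the model actually used
    outcome: str           # e.g. "approved" or "denied"
    top_factors: list      # human-readable reasons that can be shown to the consumer
    human_reviewed: bool = False
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # sort_keys gives a stable serialization, so the digest is reproducible
        return json.dumps(asdict(self), sort_keys=True)

    def digest(self) -> str:
        # SHA-256 of the serialized record lets an auditor detect later edits
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()


record = DecisionRecord(
    model_name="tenant-screening",          # hypothetical model
    model_version="2024.11.3",
    subject_id="applicant-7f3a",
    inputs={"income_to_rent": 2.4, "eviction_count": 0},
    outcome="approved",
    top_factors=["income_to_rent above threshold"],
)
print(record.to_json())
print(record.digest())
```

Storing the digest separately from the record (for example, in a different system) is what makes tampering detectable; a digest stored next to the record could simply be recomputed by whoever edits it.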

State-led investigations into AI-related privacy violations create specific compliance challenges for businesses that use artificial intelligence. The Texas AG's investigation into DeepSeek's AI model for alleged violations of the Texas Data Privacy and Security Act, for example, shows state AGs applying existing legal frameworks to new technologies with real consequences. Businesses should assess their AI systems against the consumer protection, anti-discrimination, and data security requirements of each state in which they operate.

AI technologies process large volumes of personal data, and that processing can trigger obligations under various state laws. Companies face a patchwork of regulations with meaningful differences in data collection rules, processing limitations, and consumer rights. California's CCPA/CPRA, Virginia's CDPA, and Colorado's CPA each contain distinct provisions on automated decision-making and profiling that directly affect AI deployments.

The implications of this increased scrutiny are concrete. Companies already face legal challenges and substantial financial penalties when their cybersecurity controls fall short. Organizations need compliance frameworks that address both technical security measures and ethical considerations in AI deployment, including verifiable controls for data protection, algorithmic fairness, and operational transparency.

Case Example: Washington State vs. T-Mobile

Washington State Attorney General Bob Ferguson filed a consumer protection lawsuit against T-Mobile for failing to secure the personal information of more than 2 million Washington residents. The lawsuit shows regulators holding companies accountable for specific security failures: T-Mobile allegedly ignored known cybersecurity vulnerabilities for years while making misleading claims about its data protection capabilities.

The breach, which occurred between March and August 2021, exposed the personal information of more than 79 million consumers nationwide, including highly sensitive data such as Social Security numbers, phone numbers, and addresses. According to the lawsuit, T-Mobile's subsequent notifications to affected consumers omitted critical information and downplayed the breach's severity, further compounding its legal exposure.

The lawsuit seeks civil penalties, consumer restitution, and mandated improvements to T-Mobile’s cybersecurity infrastructure and communication protocols.

Additional Legal Actions

The Massachusetts attorney general, as part of a coalition of 40 state attorneys general, secured a $391.5 million settlement with Google over allegedly deceptive location tracking practices that violated state consumer protection laws. Massachusetts received $9.3 million of the settlement, illustrating the financial consequences of privacy violations.

The Pennsylvania attorney general, coordinating with six other state attorneys general, reached an $8 million settlement with Wawa over a data breach that compromised approximately 34 million payment cards, which the states attributed to inadequate security controls.

The New York attorney general, together with the Federal Trade Commission, reached a $170 million settlement with Google and YouTube over alleged violations of the Children's Online Privacy Protection Act (COPPA), with $34 million going to New York. The case addressed the collection of children's personal information without the parental consent the law requires.

California Attorney General Rob Bonta announced a $6.75 million settlement with Blackbaud, a South Carolina company that provides data management software to nonprofits. According to the AG, Blackbaud violated consumer protection and privacy laws through security failures connected to a 2020 data breach. The company initially downplayed the breach and only later revealed that sensitive information, including Social Security and bank account numbers, had been compromised. The investigation found that Blackbaud lacked basic security measures such as multi-factor authentication. The settlement requires the company to implement comprehensive security controls, including stronger password management, network segmentation, and continuous monitoring.

Future State AG Enforcement Priorities in AI

State attorneys general are poised to significantly expand their enforcement activities around emerging AI technologies in the coming years. Based on current trends and regulatory developments, several key enforcement priorities will likely emerge:

Generative AI and Content Authenticity

State AGs will increasingly target companies deploying generative AI without adequate safeguards against deepfakes and synthetic content. Enforcement will focus on consumer protection law violations when AI-generated content misrepresents products, services, or individuals without proper disclosure. Companies that fail to implement content provenance mechanisms or “watermarking” for AI-generated materials will face particular scrutiny.
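A minimal sketch of metadata-based content provenance, assuming a hypothetical manifest format: it binds an “AI-generated” label to the exact bytes of a piece of content and signs the manifest with an HMAC key. This is not C2PA or any other published standard, and it is not a watermark. A watermark is embedded in the content itself, whereas this manifest travels alongside the content and can be stripped. Because HMAC uses a shared secret, only key holders can verify; public provenance schemes use public-key signatures instead. The key, generator name, and content bytes are placeholders.

```python
import hashlib
import hmac
import json

# Hypothetical signing key; in practice this would live in a key-management service.
SIGNING_KEY = b"replace-with-a-managed-secret"


def make_manifest(content: bytes, generator: str) -> dict:
    """Build a disclosure manifest binding an AI-generated label to the content bytes."""
    manifest = {
        "ai_generated": True,
        "generator": generator,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    manifest["signature"] = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return manifest


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """Check that the manifest is untampered and matches these exact content bytes."""
    unsigned = {k: v for k, v in manifest.items() if k != "signature"}
    payload = json.dumps(unsigned, sort_keys=True).encode("utf-8")
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many signature characters matched
    if not hmac.compare_digest(expected, manifest.get("signature", "")):
        return False
    return unsigned.get("content_sha256") == hashlib.sha256(content).hexdigest()


image = b"...generated image bytes..."
m = make_manifest(image, generator="example-image-model-v1")
print(verify_manifest(image, m))          # True
print(verify_manifest(image + b"x", m))   # False: content was altered
```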

Automated Decision Systems in Critical Sectors

Enforcement actions will intensify around AI systems making consequential decisions in housing, employment, healthcare, and financial services. State AGs will leverage existing anti-discrimination frameworks to address algorithmic bias, focusing especially on systems creating disparate impacts on protected classes. Companies should expect investigations requiring them to demonstrate rigorous testing protocols and impact assessments for high-risk AI applications.
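Testing protocols for disparate impact often start with a simple screen. The sketch below computes the adverse impact ratio behind the “four-fifths rule” from the EEOC's Uniform Guidelines on Employee Selection Procedures: each group's selection rate divided by the highest group's rate, with ratios below 0.8 flagged for closer review. It is a screening heuristic rather than a legal safe harbor, and the group labels and sample counts are hypothetical.

```python
from collections import Counter


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs. Returns the selection rate per group."""
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def adverse_impact_ratios(decisions):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    return {g: (r / best if best > 0 else 1.0) for g, r in rates.items()}


def flag_four_fifths(decisions, threshold=0.8):
    """Groups whose ratio falls below the four-fifths (80%) screening threshold."""
    ratios = adverse_impact_ratios(decisions)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


# Hypothetical audit sample: 100 applicants in each of two groups.
sample = ([("A", True)] * 60 + [("A", False)] * 40
          + [("B", True)] * 42 + [("B", False)] * 58)
print(adverse_impact_ratios(sample))
print(flag_four_fifths(sample))   # ['B']
```

Here group B's rate (0.42) is about 70% of group A's (0.60), so B is flagged. A flag is a prompt for further statistical and contextual analysis, not a finding of discrimination.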

Data Harvesting for AI Training

The collection and use of consumer data to train AI models will become a primary enforcement target. State AGs will pursue companies that scrape personal information without adequate notice, consent, or opt-out mechanisms. This enforcement will extend beyond traditional privacy frameworks to address novel harms specific to AI training data, including the extraction of biometric information and personal attributes from publicly available sources.
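To illustrate how consent and opt-out controls might be enforced inside a training pipeline, the sketch below filters records before they reach a training set. The Record fields, the BIOMETRIC_FIELDS list, and the eligibility rules are hypothetical; actual rules would depend on the statutes that apply to a given dataset.

```python
from dataclasses import dataclass

# Hypothetical attribute names treated as biometric; a real list would come from
# counsel's reading of each applicable state statute.
BIOMETRIC_FIELDS = {"face_embedding", "voiceprint", "fingerprint_template"}


@dataclass
class Record:
    subject_id: str
    consented: bool       # consent flag recorded at collection time
    attributes: dict      # the data that would be used for training


def eligible_for_training(rec: Record, opted_out: set) -> bool:
    """Apply conservative, illustrative rules before a record enters a training set."""
    if rec.subject_id in opted_out:
        return False      # honor opt-outs first
    if not rec.consented:
        return False      # no recorded consent, no training use
    if not BIOMETRIC_FIELDS.isdisjoint(rec.attributes):
        return False      # exclude records carrying biometric attributes
    return True


def build_training_set(records, opted_out):
    kept = [r for r in records if eligible_for_training(r, opted_out)]
    return kept, len(records) - len(kept)


records = [
    Record("u1", True, {"review_text": "Great service"}),
    Record("u2", True, {"review_text": "Slow"}),
    Record("u3", False, {"bio": "..."}),
    Record("u4", True, {"face_embedding": [0.1, 0.2]}),
]
kept, excluded = build_training_set(records, opted_out={"u2"})
print([r.subject_id for r in kept], excluded)   # ['u1'] 3
```

Returning the count of excluded records alongside the kept ones gives the pipeline a number it can log, which supports the kind of documentation an investigation may request.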

Cross-Border Data Flows and Model Sharing

As AI development becomes increasingly global, state AGs will scrutinize cross-border transfers of data used for AI training and deployment. Companies operating multi-state AI systems will face complex compliance challenges as AGs enforce state-specific requirements on model transparency, data minimization, and consumer rights. This will particularly impact cloud-based AI services operating across multiple jurisdictions.

These priorities reflect state AGs’ evolving approach to technology regulation, applying existing legal frameworks to novel AI applications while developing specialized expertise to address technical compliance issues. Companies developing or deploying AI technologies should prepare for more sophisticated investigations requiring detailed technical documentation of their AI systems and governance frameworks.

On December 24, 2024, Oregon's Department of Justice released guidance on how existing legal frameworks apply to AI technologies. Although Oregon lacks AI-specific legislation, the guidance takes the position that current laws, including the Unlawful Trade Practices Act, the Consumer Privacy Act, and the Equality Act, directly govern AI applications. Under this reading, companies operating in Oregon must ensure their AI systems are transparent, accurate, and fair. The guidance calls for explicit consent mechanisms before personal data is used in AI models, clear processes for withdrawing consent, and formal Data Protection Assessments for high-risk AI activities.
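Consent and withdrawal processes of the kind the Oregon guidance describes can be modeled as an append-only ledger in which the most recent event for a given person and purpose controls. This is a minimal in-memory sketch; the ConsentLedger class and the "ai_model_training" purpose label are hypothetical, and a production system would need durable storage and identity verification.

```python
from datetime import datetime, timezone


class ConsentLedger:
    """Append-only consent history; the latest event for a (subject, purpose) pair wins."""

    def __init__(self):
        self._events = []   # (timestamp, subject_id, purpose, granted)

    def record(self, subject_id: str, purpose: str, granted: bool) -> None:
        self._events.append((datetime.now(timezone.utc), subject_id, purpose, granted))

    def grant(self, subject_id: str, purpose: str) -> None:
        self.record(subject_id, purpose, True)

    def withdraw(self, subject_id: str, purpose: str) -> None:
        self.record(subject_id, purpose, False)

    def has_consent(self, subject_id: str, purpose: str) -> bool:
        # Scan newest-first so a withdrawal overrides any earlier grant.
        for _, sid, p, granted in reversed(self._events):
            if sid == subject_id and p == purpose:
                return granted
        return False   # no recorded consent means no consent


ledger = ConsentLedger()
ledger.grant("user-42", "ai_model_training")
print(ledger.has_consent("user-42", "ai_model_training"))   # True
ledger.withdraw("user-42", "ai_model_training")
print(ledger.has_consent("user-42", "ai_model_training"))   # False
```

Keeping every event instead of overwriting a single flag preserves the history of when consent was given and withdrawn, which is the evidence a regulator would likely ask for.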

California enacted a suite of AI-focused laws in 2024, most of which took effect on January 1, 2025, with some obligations phased in later:

AB 2013: Requires developers of generative AI systems to post a high-level summary of their training data on their websites by January 1, 2026, improving transparency.

AB 2905: Mandates explicit disclosure when telemarketing calls use AI-generated content, protecting consumers from deception.

SB 942: Requires providers of widely used generative AI systems to offer free tools for detecting AI-generated content, enabling content verification.

AB 1831 and SB 1381: Expand criminal prohibitions on child pornography to include AI-generated content, closing a critical legal loophole.

SB 926: Extends criminal penalties to creators of nonconsensual deepfake pornography, addressing a growing digital threat.

SB 981: Requires social media platforms to create reporting mechanisms for California users to flag sexually explicit digital identity theft.

SB 1120: Ensures health insurers maintain licensed physician oversight of AI tools in healthcare, preserving medical standards.

Mitigating State AG Enforcement Risks: Practical Compliance Measures

To reduce enforcement risks from state attorneys general, companies should implement these specific, actionable measures:

Conduct documented quarterly data privacy audits

Develop state-specific compliance protocols

Create machine-readable privacy policies with clear consumer rights notices

Implement technically verified opt-out mechanisms on all digital properties

Keep auditable records of fulfilled opt-out requests
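The last two measures can be sketched together. Under the Global Privacy Control (GPC) specification, browsers send a Sec-GPC: 1 request header, and California treats this signal as a valid request to opt out of the sale or sharing of personal information. The sketch below detects that header and records each fulfilled opt-out in a hash-chained log so that later edits are detectable. The OptOutLog class, its field names, and the visitor identifiers are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def gpc_opt_out(headers: dict) -> bool:
    """True if the request carries a Global Privacy Control signal (Sec-GPC: 1)."""
    normalized = {k.lower(): v.strip() for k, v in headers.items()}
    return normalized.get("sec-gpc") == "1"


def _hash(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class OptOutLog:
    """Append-only log where each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, subject_id: str, channel: str) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "subject_id": subject_id,
            "channel": channel,    # e.g. "gpc_header" or "web_form"
            "fulfilled_at": datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev,
        }
        entry = {**body, "hash": _hash(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; an edited entry, or one removed before the end, breaks it."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _hash(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = OptOutLog()
if gpc_opt_out({"Sec-GPC": "1", "User-Agent": "example"}):
    # ...stop selling or sharing this visitor's data here, then record the fulfilled request
    log.append("visitor-913", "gpc_header")
print(len(log.entries), log.verify())   # 1 True
log.entries[0]["channel"] = "web_form"  # simulate tampering
print(log.verify())                     # False
```

Hash chaining alone cannot detect removal of the newest entry; periodically copying the latest hash to a separate system would also catch that kind of truncation.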